The intersection of artificial intelligence (AI) and cybersecurity has become increasingly important. As cyber threats grow more sophisticated and AI technologies advance rapidly, ensuring that AI is developed safely, securely, and in a trustworthy way has become an imperative for organizations and individuals worldwide.

AI is both a tool for strengthening cybersecurity defenses and a potential vector for cyber attacks. AI-powered technologies can analyze vast amounts of data and detect anomalies faster than human analysts, but attackers can also manipulate these systems, or use AI themselves to find vulnerabilities and launch attacks at scale.

Introduction

AI and cybersecurity are two critical areas of technology that have a profound impact on the digital landscape. As artificial intelligence continues to advance, it is being deployed across industries, transforming processes and creating new possibilities. However, the spread of AI also brings new challenges in ensuring that it is developed safely, securely, and in a trustworthy way. Cybersecurity plays a pivotal role in safeguarding AI systems, data, and networks from malicious activities and potential threats.

In this article, we will explore the intersection of AI and cybersecurity and discuss the measures and strategies required to ensure safe, secure, and trustworthy development. We will delve into the various aspects of AI and cybersecurity, from the potential risks and vulnerabilities to the importance of robust defense mechanisms. By understanding the challenges and best practices in this evolving landscape, experts can effectively navigate the complexities and protect against cyber threats.

AI and Cybersecurity: A Symbiotic Relationship

The relationship between AI and cybersecurity is symbiotic, with each influencing and benefiting from the other. AI technologies can enhance cybersecurity efforts by bolstering defense mechanisms and providing proactive threat detection and response capabilities. Conversely, cybersecurity measures are crucial in securing AI algorithms, training data, and deployment systems. Let’s explore this relationship further.

AI-Powered Cybersecurity Solutions

AI-powered cybersecurity solutions utilize machine learning algorithms and other AI technologies to analyze vast amounts of data, identify patterns, and detect cyber threats. These solutions can continuously learn and adapt to evolving threats, providing real-time detection, prevention, and response capabilities. By leveraging AI, cybersecurity professionals can detect and mitigate threats more efficiently, reducing the time and effort required to combat advanced persistent threats (APTs) and other sophisticated attacks.

One significant advantage of AI-powered cybersecurity solutions is their ability to flag attacks that exploit previously unknown (zero-day) vulnerabilities. Traditional signature- and rule-based detection systems match known attack patterns, so they can miss new and evolving threats. Many AI-powered systems instead model what normal behavior looks like and flag deviations from it, which lets them surface suspicious activity even when no signature exists, although anomaly detection also tends to produce false positives that analysts must triage. This behavior-based approach helps defenders keep pace with attackers.
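A minimal sketch of the behavior-based idea, assuming a single numeric signal per host (here, outbound requests per minute) and a simple statistical baseline in place of the richer machine-learning models that commercial tools use. Observations more than three standard deviations from the baseline mean are flagged; the data, threshold, and function name are illustrative assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(baseline, observations, threshold=3.0):
    """Flag observations whose z-score against a baseline exceeds `threshold`.

    Returns a list of (index, value, z_score) tuples.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two points
    if sigma == 0:
        raise ValueError("baseline has zero variance; z-scores are undefined")
    flagged = []
    for i, x in enumerate(observations):
        z = (x - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, x, round(z, 1)))
    return flagged


# Hypothetical data: outbound requests per minute from a single host.
baseline = [52, 48, 50, 55, 47, 51, 49, 53, 50, 45]  # a "normal" period
today = [51, 49, 240, 50, 47]

print(zscore_anomalies(baseline, today))
```

Only the spike at index 2 is flagged. Real deployments model many features at once and tune thresholds to balance missed detections against false positives.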

Furthermore, AI-powered solutions can automate routine security tasks, freeing up cybersecurity professionals to focus on more complex and strategic activities. This automation streamlines processes and improves efficiency, allowing organizations to respond to threats quickly and effectively. From monitoring network activity to analyzing logs and generating incident reports, AI technologies can significantly enhance the operational capabilities of cybersecurity teams.
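As a small illustration of the kind of routine task that gets automated, the sketch below counts failed SSH logins per source IP and produces a short incident summary. This is plain rule-based automation rather than machine learning; the log lines are modeled loosely on OpenSSH "Failed password" messages, and the function name and threshold of five attempts are hypothetical.

```python
import re
from collections import Counter

# Assumed log format, modeled loosely on OpenSSH auth messages; adapt to your logs.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(\S+) from (\d{1,3}(?:\.\d{1,3}){3})"
)


def triage_failed_logins(lines, threshold=5):
    """Count failed logins per source IP; return (suspicious_ips, report_lines)."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    suspicious = {ip: n for ip, n in counts.items() if n >= threshold}
    # Most active sources first; ties broken by IP for a stable report.
    ranked = sorted(suspicious.items(), key=lambda kv: (-kv[1], kv[0]))
    report = [f"{ip}: {n} failed logins" for ip, n in ranked]
    return suspicious, report


sample = (
    ["sshd[101]: Failed password for root from 203.0.113.7 port 52144 ssh2"] * 6
    + ["sshd[102]: Failed password for invalid user admin from 198.51.100.4 port 40022 ssh2"] * 2
    + ["sshd[103]: Accepted publickey for alice from 192.0.2.10 port 50100 ssh2"]
)
suspicious, report = triage_failed_logins(sample)
for line in report:
    print(line)
```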

AI-powered cybersecurity solutions are not infallible, however. Attackers can target the models themselves, and they can use the same AI techniques to build more sophisticated attacks. Adversarial machine learning, for example, exploits weaknesses in AI algorithms by crafting inputs that manipulate or deceive the model, such as a malicious sample altered just enough that a detector classifies it as benign. This necessitates continuous research and development to improve the resilience and robustness of AI-powered security solutions.
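To make evasion concrete, here is a toy sketch assuming a hypothetical logistic-regression malware detector with three numeric features. For a linear model, the gradient of the score with respect to the input is simply the weight vector, so a fast-gradient-sign-style step moves each feature by `epsilon` against the sign of its weight. The weights, sample, and `epsilon` are made up for illustration.

```python
import math


def score(w, b, x):
    """Logistic-regression probability that sample x is malicious."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 / (1 + math.exp(-z))


def _sign(v):
    return (v > 0) - (v < 0)


def evasion_perturbation(w, x, epsilon):
    """FGSM-style step for a linear model: shift each feature by epsilon
    against the sign of its weight, which lowers the malicious score."""
    return [xi - epsilon * _sign(wi) for wi, xi in zip(w, x)]


# Hypothetical detector weights and a sample it currently flags as malicious.
w = [2.0, -1.0, 1.5]
b = -1.0
x = [1.0, 0.2, 0.8]

x_adv = evasion_perturbation(w, x, epsilon=0.5)
print(f"original: {score(w, b, x):.3f}, perturbed: {score(w, b, x_adv):.3f}")
```

The perturbed sample falls below the 0.5 decision threshold even though each feature moved by only 0.5. In practice an attacker must also keep the modified input functional (a malware sample must still run), which limits which features can change.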

Securing AI Systems and Data

Cybersecurity plays a critical role in securing AI systems and data throughout their lifecycle, from development to deployment. The following are key considerations for ensuring the security and trustworthiness of AI:

- Data Privacy: Protecting sensitive data used for AI training and implementation is crucial. Encryption, access controls, and data anonymization techniques should be employed to safeguard personally identifiable information (PII) and other sensitive data.

- Secure Development Practices: Incorporating security best practices during the development of AI systems is essential. This includes conducting security assessments, implementing secure coding practices, and regularly updating software and firmware to address vulnerabilities.

- Robust Authentication: Strong authentication measures, such as multifactor authentication (MFA) and biometric identification, should be implemented to control access to AI systems and prevent unauthorized tampering.

- Defensive AI: AI systems can be trained to detect and defend against adversarial attacks. By incorporating adversarial training and robust anomaly detection techniques, AI systems can better withstand sophisticated attacks.
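Multifactor authentication often pairs a password with a time-based one-time password (TOTP) from an authenticator app. The sketch below implements the RFC 6238 algorithm with Python's standard library, using HMAC-SHA1 and a 30-second step (the RFC defaults). The function names are our own, and a production system would also need secure secret storage, rate limiting, and protection against code reuse.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32, for_time=None, step=30, digits=6):
    """RFC 6238 time-based one-time password (HMAC-SHA1, dynamic truncation)."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if for_time is None else for_time
    counter = int(now // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # low 4 bits of the last byte pick the window
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret_b32, submitted, window=1, now=None, step=30):
    """Accept codes from the current step and +/- `window` steps (clock drift)."""
    now = time.time() if now is None else now
    return any(
        hmac.compare_digest(totp(secret_b32, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


# RFC 6238 Appendix B test secret ("12345678901234567890" in Base32).
SECRET = "GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ"
print(totp(SECRET, for_time=59, digits=8))  # RFC test vector for T=59 s
```

Allowing one step on either side of the current time tolerates modest clock drift between client and server, at the cost of a slightly longer window in which a stolen code remains valid.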

Additionally, organizations should prioritize continuous monitoring and auditing of AI systems to detect and respond to any potential security breaches. By adopting a proactive and layered security approach, organizations can minimize the risk of AI-based vulnerabilities and ensure the overall integrity of their AI systems and data.
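One concrete form of integrity monitoring is checking that deployed model files have not changed since release. This sketch, with hypothetical function names, records SHA-256 digests in a manifest and later recomputes them. It assumes the manifest itself is stored somewhere an attacker cannot also modify (ideally signed).

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest):
    """Compare current SHA-256 digests with those recorded at release time.

    `manifest` maps file paths to expected hex digests. Returns (path, problem)
    tuples; an empty list means every file matched.
    """
    problems = []
    for path, expected in manifest.items():
        p = Path(path)
        if not p.is_file():
            problems.append((path, "missing"))
        elif sha256_of(p) != expected:
            problems.append((path, "digest mismatch"))
    return problems


# Demo with a throwaway file standing in for model weights.
with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "model.bin"
    weights.write_bytes(b"weights-v1")
    manifest = {str(weights): sha256_of(weights)}  # recorded at release time
    before = verify_artifacts(manifest)
    weights.write_bytes(b"weights-v1-tampered")  # simulate tampering
    after = verify_artifacts(manifest)

print(before, after)
```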

The Challenges of AI and Cybersecurity

While the integration of AI and cybersecurity offers numerous benefits, it also presents several challenges that need to be addressed. Let’s explore some of the key challenges associated with AI and cybersecurity:

Data Security and Privacy

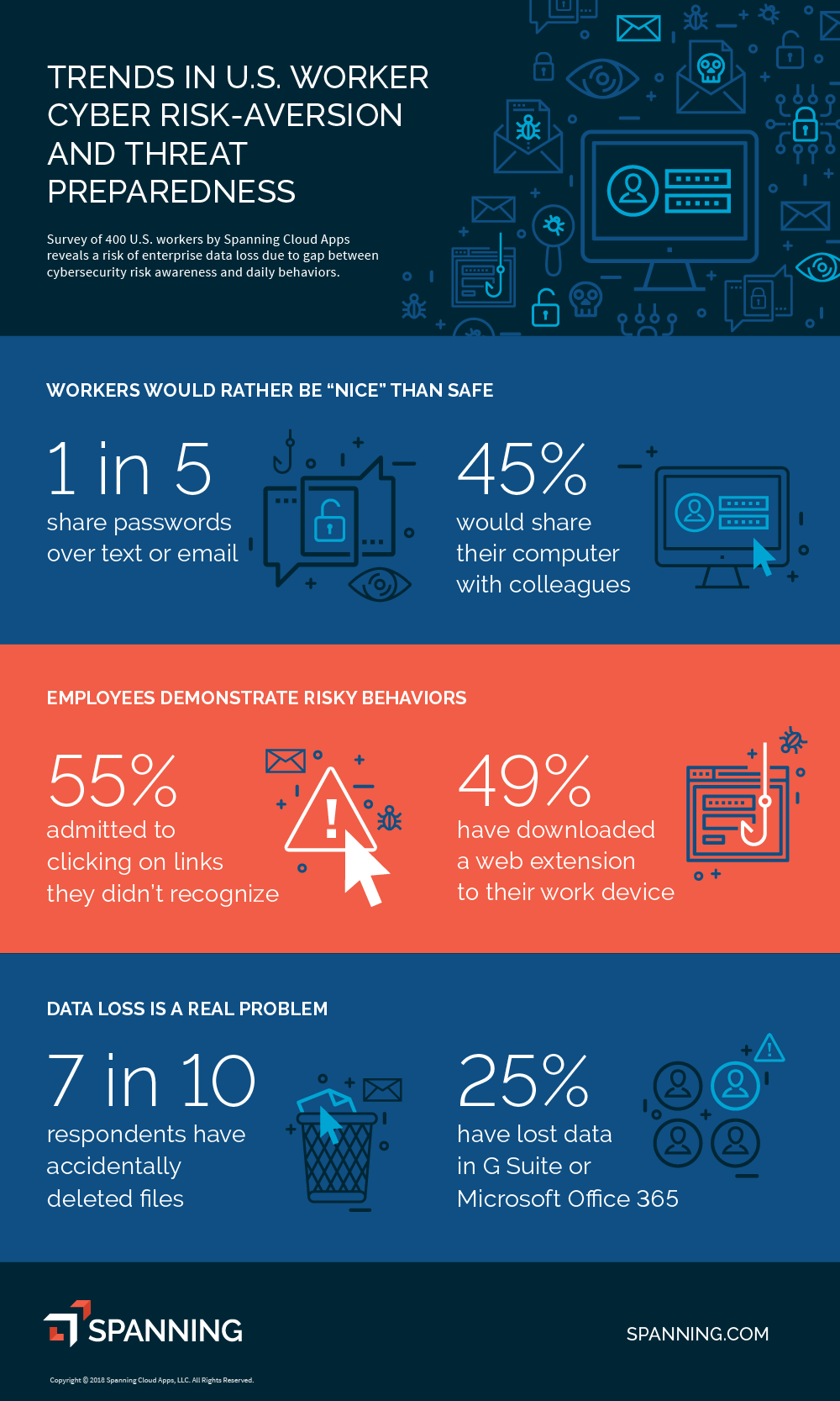

The use of AI relies heavily on data, and ensuring the security and privacy of that data is paramount. Organizations must implement robust data protection measures to safeguard sensitive information from unauthorized access, breaches, and misuse. This includes encryption, access controls, data anonymization, and compliance with relevant data protection regulations.
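Where analysts need to link records without seeing raw identifiers, one common technique is keyed pseudonymization: replacing each identifier with an HMAC of it under a secret key. This is a sketch with hypothetical field names and key. Note that pseudonymization is weaker than true anonymization, since anyone holding the key can re-link tokens to known values, and regulations such as the GDPR generally still treat pseudonymized data as personal data.

```python
import hashlib
import hmac


def pseudonymize(value, key):
    """Replace a PII value with a keyed HMAC-SHA256 token.

    The same normalized input and key always give the same token, so records
    can still be joined. Trimming and lowercasing is an assumed normalization.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def scrub_record(record, pii_fields, key):
    """Return a copy of `record` with the named PII fields pseudonymized."""
    return {k: (pseudonymize(v, key) if k in pii_fields else v) for k, v in record.items()}


# Hypothetical key: in practice, load it from a key manager, never hard-code it.
key = b"replace-with-a-secret-from-a-key-manager"
row = {"email": "Alice@Example.com", "age_band": "30-39", "clicks": 17}
print(scrub_record(row, {"email"}, key))
```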

As AI systems become more capable of processing vast amounts of data, they also raise concerns about privacy and the ethical use of data. It is crucial to establish transparent and accountable practices for collecting, using, and sharing data, and to obtain proper consent from individuals.

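Adversarial attacks on AI systems can also compromise data integrity and confidentiality. Adversaries may poison training data so the model learns the wrong behavior, or feed it crafted inputs at inference time to deceive it; other attacks, such as membership inference, try to learn from a model's outputs whether specific records were in its training data. Protecting against such attacks requires robust security measures that can detect and mitigate adversarial activities.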
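Defenses against poisoning range from data provenance controls to robust training methods. As one simple, illustrative filter, the sketch below rejects training values whose modified z-score (based on the median and median absolute deviation, with the 3.5 cutoff suggested by Iglewicz and Hoaglin) marks them as extreme outliers. The feature and values are hypothetical, and this only catches crude poisoning; carefully crafted poison points that look statistically normal will pass.

```python
from statistics import median


def mad_filter(samples, k=3.5):
    """Split samples into (kept, rejected) using the modified z-score
    0.6745 * |x - median| / MAD, rejecting values above k."""
    med = median(samples)
    mad = median(abs(x - med) for x in samples)
    if mad == 0:
        return list(samples), []  # no spread to measure against; keep everything
    kept, rejected = [], []
    for x in samples:
        modified_z = 0.6745 * abs(x - med) / mad
        (rejected if modified_z > k else kept).append(x)
    return kept, rejected


# Hypothetical feature (payload length in bytes) with two injected values.
samples = [410, 395, 402, 388, 415, 399, 405, 392, 9000, 8800]
kept, rejected = mad_filter(samples)
print("kept:", kept)
print("rejected:", rejected)
```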

Overall, addressing the challenges of data security and privacy in the context of AI is crucial to building trust and ensuring the responsible and ethical use of AI technologies.

Bias and Fairness

AI algorithms and models learn from training data, which can encode existing social biases and inequalities and lead the model to perpetuate them. This raises concerns about fairness and discrimination in AI decision-making. It is essential to address these biases and ensure that AI systems are designed and implemented in a way that is fair, transparent, and accountable.

Responsible AI development involves establishing diverse and inclusive development teams, rigorously testing and validating AI algorithms for bias, and implementing measures to mitigate biases during training and deployment. Regular audits and monitoring of AI systems can help identify and rectify any biases that may emerge over time.
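Bias testing usually starts by comparing outcomes across groups. The sketch below computes each group's selection rate (the share of positive predictions), the demographic parity difference, and the ratio of the lowest rate to the highest. The predictions and group labels are hypothetical, and these are only two of many fairness metrics, some of which conflict with one another.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": lowest / highest if highest else 1.0,
    }


# Hypothetical model decisions for two groups of five applicants.
preds = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5
print(fairness_report(preds, groups))
```

A common rule of thumb, the "four-fifths rule" from US employment guidance, treats a ratio below 0.8 as a sign of possible adverse impact; here the ratio is 0.5.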

Lack of Explainability

AI systems, particularly those powered by deep learning algorithms, can be complex and opaque, making it challenging to understand their decision-making processes. This lack of explainability can limit the ability to detect and address biases, errors, or malicious behaviors in AI systems.

Experts and researchers are actively working on developing explainable AI (XAI) methods and techniques that enhance the interpretability and transparency of AI systems. XAI aims to provide insights into how AI algorithms arrive at their decisions, enabling humans to understand, validate, and trust the outputs of AI models.
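For a linear scoring model, an explanation can be exact: relative to an all-zero baseline, each feature contributes its weight times its value, and the contributions sum to the score minus the bias. The sketch assumes a hypothetical phishing-email model; for deep or otherwise nonlinear models, XAI tools such as SHAP and LIME approximate attributions of this kind instead.

```python
def linear_attributions(weights, features, baseline=None):
    """Per-feature contributions w_i * (x_i - baseline_i) for a linear model,
    sorted by absolute size so the most influential features come first."""
    baseline = baseline or {name: 0.0 for name in weights}
    contribs = {n: weights[n] * (features[n] - baseline[n]) for n in weights}
    return dict(sorted(contribs.items(), key=lambda kv: -abs(kv[1])))


# Hypothetical phishing-detection model and one incoming email.
weights = {"num_links": 0.8, "sender_age_days": -0.01, "has_attachment": 1.2}
bias = -2.0
email = {"num_links": 5, "sender_age_days": 3, "has_attachment": 1}

attr = linear_attributions(weights, email)
score = bias + sum(attr.values())
for name, contribution in attr.items():
    print(f"{name:>16}: {contribution:+.2f}")
print(f"score = {score:.2f}")
```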

Ensuring Safe and Trustworthy AI Development

As the field of AI continues to evolve, it is crucial to prioritize safe and trustworthy development practices. This involves considering the following:

Ethical Considerations

AI developers, researchers, and organizations must address the ethical implications of AI technologies. This includes promoting ethical use, transparency, and accountability in AI development and deployment. Establishing ethical guidelines and frameworks can help ensure responsible AI development practices and prevent the misuse of AI for harmful purposes.

Collaboration and Knowledge Sharing

Given the rapid pace of AI development and the evolving nature of cybersecurity threats, collaboration and knowledge sharing are vital. Experts, researchers, and organizations should actively collaborate to share best practices, insights, and lessons learned. This collective effort can help the industry stay ahead of emerging threats and develop innovative solutions to address them.

Continuous Education and Training

AI professionals and cybersecurity experts must commit to continuous education and training to stay updated on the latest developments, techniques, and threats. This includes understanding the legal, regulatory, and compliance aspects of AI and cybersecurity and staying informed about emerging technologies, frameworks, and standards.

Adopting a Risk-Based Approach

The dynamic and evolving nature of AI and cybersecurity necessitates a risk-based approach to development and defense. Organizations should conduct regular risk assessments to identify potential vulnerabilities, prioritize resources and investments, and develop mitigation strategies. This approach ensures a proactive and adaptive stance towards AI and cybersecurity.
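A lightweight way to start a risk-based approach is a risk register scored as likelihood times impact on 1-to-5 scales, a common qualitative method. Everything below (the scales, the threshold of 12 for active mitigation, and the example risks) is an illustrative assumption, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    impact: int      # 1 (minimal) to 5 (severe); assumed scale

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks, treat_threshold=12):
    """Rank risks by score (stable for ties) and suggest an action for each."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [
        (r.name, r.score, "mitigate" if r.score >= treat_threshold else "monitor")
        for r in ranked
    ]


# Hypothetical register for an organization deploying AI-based detection.
register = [
    Risk("Training-data poisoning via public data sources", 3, 4),
    Risk("Stolen API keys for model endpoint", 4, 4),
    Risk("Adversarial evasion of malware classifier", 4, 3),
    Risk("Model weights exfiltrated", 2, 5),
]
for name, score, action in prioritize(register):
    print(f"{score:>2}  {action:<8}  {name}")
```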

Conclusion

The integration of AI and cybersecurity is crucial for ensuring safe, secure, and trustworthy development. AI-powered cybersecurity solutions offer advanced threat detection and response capabilities, while robust cybersecurity measures are needed to secure AI systems and data. However, the challenges of data security, bias, and lack of explainability must be addressed to ensure ethical and responsible AI development. By prioritizing ethical considerations, collaboration, continuous education, and a risk-based approach, experts can navigate the complexities of AI and cybersecurity and build a secure digital future.


Key Takeaways

- AI can greatly enhance cybersecurity measures by detecting and responding to threats in real time.

- However, AI can also be vulnerable to attacks, so it’s important to ensure its development is safe and secure.

- Trustworthy AI systems should be transparent and explainable, so users can understand their decision-making processes.

- Cybersecurity professionals must stay up to date with the latest AI technologies and potential risks to effectively protect against them.

- A collaborative approach between AI developers, cybersecurity experts, and policymakers is crucial for creating a secure and trustworthy AI ecosystem.

AI and cybersecurity go hand in hand to protect our digital world.

AI is being used to detect and counter cyber threats, supporting safer and more secure development.

It is important to build trust in AI systems and ensure that they are accountable and transparent.

Organizations must prioritize cybersecurity and invest in robust defenses and protocols.

Training AI systems on diverse datasets and continuously updating them helps anticipate and mitigate emerging threats.

Collaboration between AI developers, cybersecurity experts, and policymakers is crucial for a resilient and trustworthy digital ecosystem.

By leveraging AI and cybersecurity together, we can create a safer and more secure digital future for all.