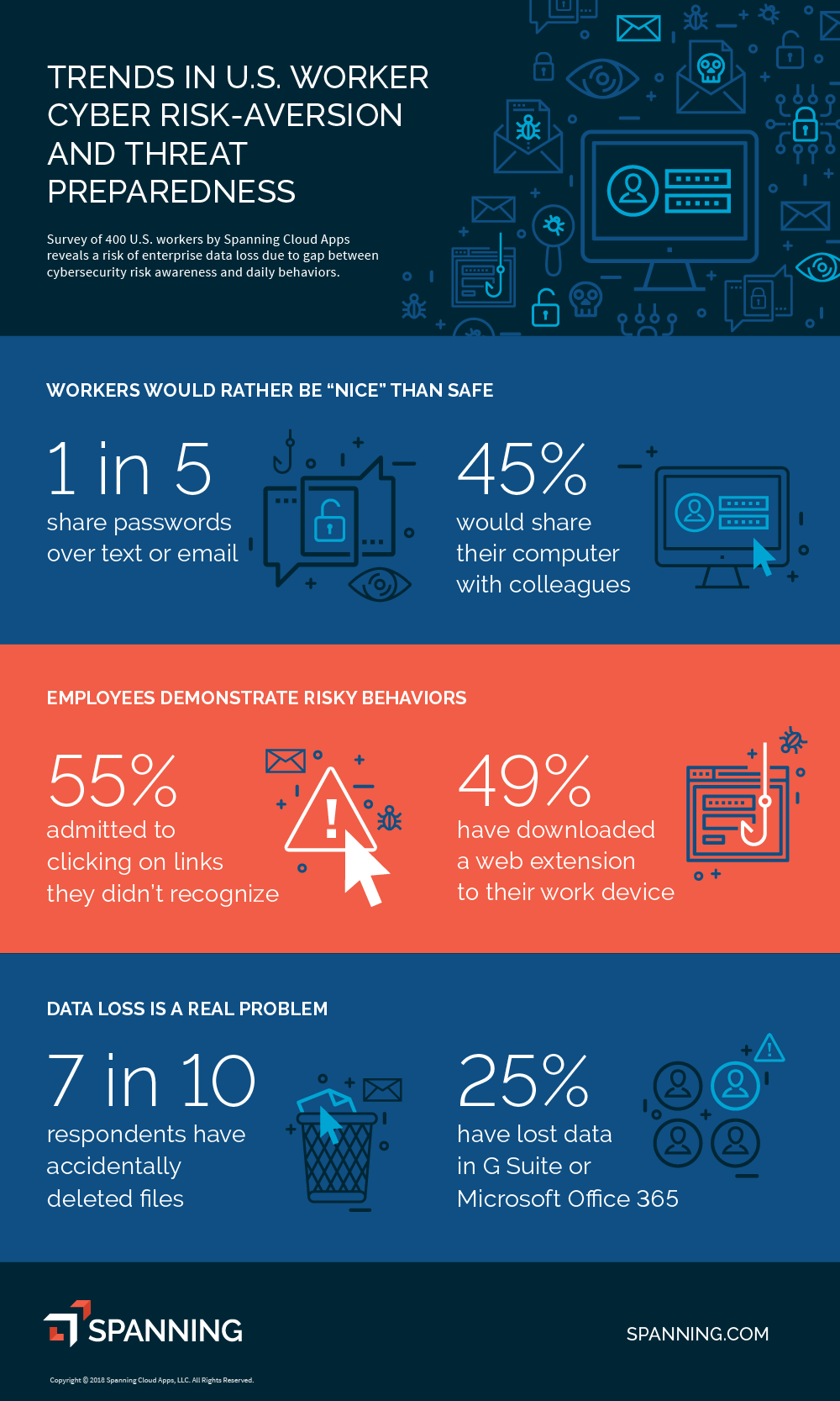

AI and cybersecurity are closely interconnected fields, and both are central to the safe, secure, and trustworthy development of technology. As AI reshapes more industries, robust cybersecurity measures become increasingly important. The rise of cyber threats, including attacks that themselves use AI, makes the intersection of the two fields a pressing concern.

AI has become a powerful tool for both cyber attackers and defenders. Defenders can use it to detect and prevent sophisticated threats, while malicious actors can use it to launch attacks that are harder to detect. To ensure safe and secure development, it is essential to implement AI technologies that can identify and mitigate potential risks. By integrating AI into cybersecurity systems, organizations can improve threat detection, shorten response times, and strengthen proactive defenses, leading to a more secure digital landscape.

AI and Cybersecurity: Implementing Effective Measures for Safe and Secure Development

The widespread adoption of artificial intelligence (AI) has changed many industries, including cybersecurity. As AI technologies advance, they have become valuable tools for detecting and mitigating cyber threats. However, as AI becomes more prominent in cybersecurity, it is crucial to ensure that it is developed in a safe, secure, and trustworthy way. This article explores the importance of AI in cybersecurity and discusses strategies for maintaining robust security measures throughout the development process.

To discuss AI and cybersecurity, it helps to first understand the role AI plays in detecting and preventing cyber threats. AI-powered algorithms analyze large volumes of data, identify patterns, and detect anomalies that may indicate a security breach. They can detect and respond to threats in real time, which reduces response times and improves overall security. However, as AI evolves, cybercriminals adapt their techniques to evade detection. It is therefore crucial to implement effective measures to ensure the safe and secure development of AI-powered cybersecurity systems.
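To make the idea of anomaly detection concrete, here is a minimal sketch that flags values far from the mean using a z-score. It is only an illustration: production systems typically use far richer models, and the function name `find_anomalies`, the threshold, and the sample login counts are assumptions made up for this example.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.5):
    """Return the indices of values whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical hourly counts of failed logins; index 7 holds an injected spike.
failed_logins = [4, 5, 3, 6, 4, 5, 4, 90, 5, 3, 4, 6]
print(find_anomalies(failed_logins))  # prints [7]
```

A single large outlier also inflates the standard deviation, so on short windows a lower threshold, or a robust statistic such as the median, may be a better choice.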

One of the primary concerns in AI-powered cybersecurity is the potential for adversarial attacks. Adversarial attacks manipulate the inputs to an AI algorithm, or the algorithm itself, so that it produces incorrect results or lets an attacker bypass the system’s security measures. These attacks exploit vulnerabilities in AI systems and can have severe consequences if successful. To mitigate the risk, developers must adopt techniques such as robust model training, data augmentation, and rigorous testing in adversarial scenarios. These measures make AI-powered cybersecurity systems more resilient to adversarial attacks.

Securing AI Training Data and Models

Securing AI training data and models is crucial to ensure the integrity and reliability of AI-powered cybersecurity systems. AI algorithms heavily rely on training data to learn and make decisions. If the training data is compromised or manipulated, it can lead to biased or inaccurate predictions, potentially compromising cybersecurity efforts. Therefore, it is essential to follow best practices for securing training data.

One of the key strategies for securing AI training data is to ensure data privacy and protection. This involves implementing robust encryption techniques to safeguard sensitive data and restrict access to authorized personnel. Additionally, data anonymization can be employed to remove personally identifiable information from the training data, further protecting individual privacy.
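One way to approach the anonymization step is keyed pseudonymization, sketched below with Python's standard `hmac` and `hashlib` modules. Note that this replaces identifiers with stable tokens rather than fully anonymizing the data, and the field names, the key, and the helper names `pseudonymize` and `scrub_record` are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 token (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, pii_fields: set, key: bytes) -> dict:
    """Return a copy of the record with the listed PII fields tokenized."""
    return {
        name: pseudonymize(str(value), key) if name in pii_fields else value
        for name, value in record.items()
    }


# Hypothetical log record and key; a real key would come from a secrets manager.
key = b"demo-key-do-not-use-in-production"
record = {"username": "alice", "src_ip": "203.0.113.7", "bytes_sent": 5120}
scrubbed = scrub_record(record, {"username", "src_ip"}, key)
print(scrubbed["bytes_sent"], len(scrubbed["username"]))
```

Because the same input always maps to the same token, records can still be joined and counted per user during training, while the raw identifiers stay out of the dataset.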

Another critical aspect of securing AI training data is ensuring that the data is diverse and representative of real-world scenarios. Biased data can cause AI algorithms to make inaccurate predictions or favor certain groups over others, which can have ethical and legal implications. Developers must therefore carefully curate and validate their training data to minimize bias and ensure the fairness and inclusivity of AI-powered cybersecurity systems.

In addition to securing training data, developers must also implement measures to protect AI models from unauthorized access or tampering. This involves using robust authentication and authorization mechanisms to restrict access to AI models and securing the storage and transmission of model updates. By effectively securing both training data and models, developers can enhance the trustworthiness and reliability of AI-powered cybersecurity systems.
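As one possible way to detect tampering with a stored or transmitted model, the sketch below attaches an HMAC-SHA256 tag to the serialized model and checks it before loading. The names `sign_model` and `verify_model`, the key, and the byte string standing in for model weights are all assumptions for illustration.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an integrity tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag in constant time before the model is loaded."""
    return hmac.compare_digest(sign_model(model_bytes, key), expected_tag)


# Hypothetical stand-ins for a signing key and a serialized model file.
signing_key = b"demo-signing-key"
model_bytes = b"serialized-model-weights"
tag = sign_model(model_bytes, signing_key)

print(verify_model(model_bytes, signing_key, tag))             # prints True
print(verify_model(model_bytes + b"\x00", signing_key, tag))   # prints False
```

This only covers integrity. Restricting who can read or replace the model still depends on the authentication and authorization mechanisms described above.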

Evaluating AI Algorithms for Robustness and Resilience

Ensuring the robustness and resilience of AI algorithms is essential for the development of secure cybersecurity systems. As mentioned earlier, adversarial attacks pose a significant threat to AI-powered cybersecurity. It is therefore crucial to evaluate the robustness of AI algorithms so that vulnerabilities can be detected and mitigated.

Evaluating AI algorithms for robustness involves subjecting them to various adversarial scenarios to identify potential weaknesses. This can be done through adversarial testing, where developers simulate different attack scenarios and assess the algorithm’s performance. By identifying vulnerabilities and weaknesses, developers can then implement appropriate measures to enhance the algorithm’s resilience against adversarial attacks.
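A simple form of adversarial testing can be sketched for a linear classifier: shift every feature by a small budget `epsilon` in the direction that hurts the true label, then measure how accuracy degrades. This is a toy version of a sign-based perturbation, not a full attack suite, and the weights, samples, and function names are invented for the example.

```python
def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def predict(weights, bias, features):
    """Label 1 (malicious) if the linear score is positive, else 0."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def perturb(weights, features, label, epsilon):
    """Move each feature by epsilon so the score shifts away from the true label."""
    direction = -1 if label == 1 else 1
    return [f + direction * epsilon * sign(w) for w, f in zip(weights, features)]


def accuracy_under_attack(weights, bias, samples, epsilon):
    """Fraction of samples still classified correctly after perturbation."""
    correct = 0
    for features, label in samples:
        attacked = perturb(weights, features, label, epsilon)
        correct += predict(weights, bias, attacked) == label
    return correct / len(samples)


# Hypothetical model and labeled samples.
weights, bias = [2.0, -1.0], -0.5
samples = [([1.0, 0.2], 1), ([0.1, 0.9], 0), ([0.5, 0.3], 1), ([0.0, 0.5], 0)]

print(accuracy_under_attack(weights, bias, samples, 0.0))  # prints 1.0
print(accuracy_under_attack(weights, bias, samples, 0.1))  # prints 0.75
```

Plotting accuracy against increasing values of `epsilon` gives a rough picture of how much manipulation the model tolerates before its decisions flip.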

Another aspect of evaluating AI algorithms for robustness is to assess their generalizability. An algorithm’s performance on the training data may not necessarily reflect its performance in real-world scenarios. Therefore, developers must evaluate the algorithm’s ability to generalize its predictions to unseen data. This can be done through cross-validation and testing on diverse datasets.
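The cross-validation mentioned here can be sketched as a k-fold split: the data is divided into k parts, and each part takes one turn as the held-out test set. The helper `k_fold_indices` below is a hypothetical, dependency-free illustration. In practice the data would usually be shuffled first, or a library routine used instead.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n_samples) if i < start or i >= stop]
        yield train, test
        start = stop


for train, test in k_fold_indices(10, 3):
    print(len(train), test)
```

Training on each `train` set and scoring on the matching `test` set yields k performance estimates, and their spread indicates how stable the algorithm is on unseen data.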

Furthermore, it is essential to regularly update and refine AI algorithms to adapt to evolving cybersecurity threats. Cybercriminals continuously develop new techniques to exploit vulnerabilities, and AI algorithms must stay ahead of these threats. Regular updates and refinements based on real-world data and emerging threat intelligence can significantly enhance the resilience and effectiveness of AI-powered cybersecurity systems.

Applying Explainable AI in Cybersecurity

Explainable AI (XAI) refers to methods that make the decision-making process of AI algorithms understandable and interpretable to humans. In the context of cybersecurity, XAI plays a crucial role in enabling human experts to assess and trust the outputs of AI systems. XAI techniques aim to provide transparent and interpretable models that can explain the reasoning behind AI predictions and decisions.

By incorporating XAI techniques in AI-powered cybersecurity systems, developers can enhance trust, accountability, and understandability. XAI techniques, such as rule-based systems or visualizations, allow cybersecurity professionals to identify potential biases, understand how the AI arrived at a particular decision, and detect any potential vulnerabilities or limitations. This transparency and interpretability can help bridge the gap between AI algorithms and human experts, facilitating effective collaboration in cybersecurity operations.
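For an inherently interpretable model such as a linear scorer, one simple explanation is the contribution of each feature to the final score. The sketch below ranks those contributions. The feature names, weights, and the `explain` helper are hypothetical, and more complex models would need dedicated explanation methods.

```python
def explain(weights: dict, features: dict):
    """Rank features by the size of their contribution (weight * value) to the score."""
    contributions = {
        name: weights[name] * features.get(name, 0.0) for name in weights
    }
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical alert-scoring model and one observed event.
weights = {"failed_logins": 0.8, "new_device": 1.5, "off_hours": 0.4}
event = {"failed_logins": 5, "new_device": 1, "off_hours": 0}

for name, contribution in explain(weights, event):
    print(f"{name}: {contribution:+.2f}")
```

An analyst reading this output can see that the repeated failed logins, rather than the new device, drove the alert, and can judge whether that reasoning is sound.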

Collaboration between AI and Human Experts

While AI algorithms can detect and respond to cyber threats in real time, human expertise remains invaluable in cybersecurity. Effective collaboration between AI and human experts is crucial to ensure safe, secure, and trustworthy development.

Human experts possess domain knowledge, experience, and intuition that can complement the capabilities of AI algorithms. By combining human insights with AI algorithms, cybersecurity professionals can improve the accuracy, efficiency, and effectiveness of threat detection and mitigation. Additionally, human experts can provide context, interpret findings, and make informed decisions based on the outputs of AI systems.

Moreover, collaboration between AI and human experts is essential for continuously improving and updating AI algorithms. Human experts can provide feedback, validate results, and contribute to the development and refinement of AI-powered cybersecurity systems. This iterative collaboration ensures that AI algorithms remain adaptive, resilient, and effective in combating emerging cyber threats.
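One common way to structure this collaboration is confidence-based triage: the system acts on its own only when the model is very sure and routes everything else to an analyst. The sketch below assumes a model that outputs a probability of maliciousness. The thresholds, queue names, and alert IDs are illustrative assumptions.

```python
def triage(alerts, block_above=0.95, dismiss_below=0.05):
    """Route each (alert_id, score) pair by the model's confidence."""
    queues = {"auto_block": [], "human_review": [], "auto_dismiss": []}
    for alert_id, score in alerts:
        if score >= block_above:
            queues["auto_block"].append(alert_id)
        elif score <= dismiss_below:
            queues["auto_dismiss"].append(alert_id)
        else:
            # Uncertain cases go to an analyst, whose verdict can later
            # be fed back as a labeled training example.
            queues["human_review"].append(alert_id)
    return queues


# Hypothetical alerts with model scores.
alerts = [("a1", 0.99), ("a2", 0.50), ("a3", 0.02), ("a4", 0.80)]
queues = triage(alerts)
print(queues["human_review"])  # prints ['a2', 'a4']
```

Tightening or loosening the two thresholds trades analyst workload against the risk of automated mistakes.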

Ethical Considerations in AI and Cybersecurity

As AI technologies become more prevalent in cybersecurity, it is crucial to address the ethical considerations associated with their use. Ethical considerations play a significant role in ensuring the safe and secure development of AI-powered cybersecurity systems.

One of the key ethical considerations in AI and cybersecurity is the potential for bias and discrimination. AI algorithms are trained on historical data, which may reflect existing biases and inequalities. If not properly addressed, these biases can be perpetuated and result in discriminatory outcomes. Therefore, designers and developers must be conscious of potential biases and strive to minimize them through appropriate training data and algorithm design.
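A basic bias check is to compare error rates across groups, for example how often benign activity is wrongly flagged for each group. The sketch below computes a per-group false positive rate. The grouping by office and the sample records are made up for illustration, and a real audit would use more data and more than one metric.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_malicious, actually_malicious)."""
    false_positives = defaultdict(int)
    benign_total = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            # Only benign events can produce false positives.
            benign_total[group] += 1
            false_positives[group] += bool(predicted)
    return {g: false_positives[g] / benign_total[g] for g in benign_total}


# Hypothetical detection results, grouped by office.
records = [
    ("office_a", True, False),
    ("office_a", False, False),
    ("office_a", True, True),
    ("office_b", False, False),
    ("office_b", False, False),
]
print(false_positive_rate_by_group(records))
# prints {'office_a': 0.5, 'office_b': 0.0}
```

A large gap between groups, as in this toy data, is a signal to revisit the training data or the algorithm design.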

Additionally, AI-powered cybersecurity systems should respect user privacy and protect personal information. Data breaches and unauthorized access can have severe consequences, including identity theft and financial loss. Developers must adhere to data protection standards, implement robust encryption techniques, and regularly update security measures to safeguard user data.

Another important ethical consideration is the transparency and accountability of AI algorithms. As AI-powered cybersecurity systems make critical decisions and predictions, it is essential to have mechanisms in place that allow for oversight, accountability, and recourse. This can include auditability, traceability, and the ability to challenge and contest AI decisions when necessary.
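Auditability and traceability can be supported by a tamper-evident log of AI decisions. The sketch below chains entries together with SHA-256 hashes, so altering any past entry breaks verification. The entry fields and the helper names `append_entry` and `verify_log` are assumptions for this example, not a standard format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash: str, decision: dict) -> str:
    payload = json.dumps(decision, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, decision: dict) -> None:
    """Append a decision, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "decision": decision,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, decision),
    })


def verify_log(log: list) -> bool:
    """Recompute every hash and confirm the chain is unbroken."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["decision"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decisions recorded by a detection system.
audit_log = []
append_entry(audit_log, {"alert": "a1", "action": "block", "score": 0.99})
append_entry(audit_log, {"alert": "a2", "action": "review", "score": 0.50})
print(verify_log(audit_log))  # prints True

audit_log[0]["decision"]["action"] = "dismiss"  # simulate tampering
print(verify_log(audit_log))  # prints False
```

Such a log gives reviewers a reliable record to consult when an AI decision is challenged or contested.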

In conclusion, the combination of AI and cybersecurity holds immense potential for detecting and mitigating cyber threats. However, it is crucial to ensure the safe, secure, and trustworthy development of AI-powered cybersecurity systems. This involves securing training data and models, evaluating AI algorithms for robustness and resilience, incorporating explainable AI techniques, fostering collaboration between AI and human experts, and addressing ethical considerations. By following these strategies, developers can harness the power of AI while maintaining high standards of security and trust in the field of cybersecurity.

Key Takeaways

- AI plays a critical role in cybersecurity by helping to detect and prevent cyber threats.

- Developers must prioritize security measures to ensure that AI systems are not vulnerable to attacks.

- Trustworthiness is crucial for AI systems, and efforts should be made to ensure transparency and accountability.

- The use of AI in cybersecurity can enhance threat intelligence and response capabilities.

- Ongoing research and development are necessary to stay ahead of evolving cyber threats.

Artificial intelligence (AI) plays a crucial role in cybersecurity and supports the development of safe, secure, and trustworthy systems. AI technology can help detect and prevent cyber threats by analyzing large volumes of data, identifying patterns, and predicting potential attacks.

AI-powered cybersecurity solutions can enhance the protection of sensitive information, detect malicious activities, and respond quickly to emerging threats. By leveraging AI, organizations can strengthen their defenses and stay one step ahead of cybercriminals, safeguarding their digital assets and maintaining trust in the digital world.